Introduction

We recently had the opportunity to explore, workshop, and implement an OpenAI-powered solution to handle policy documents for one of our customers. The premise was simple enough: index and search through several legal policy documents, with the following initial outcomes:

- Enable document summarisation and retrieval.

- Provide a Q&A portal for staff to “talk” to their documents.

The solution would also need to support a future state that can:

- Identify legal policy delegation information from content.

- Identify new policy approvers.

- Allow “bring your own” (BYO) document Q&A.

- Have an auditable log of chat history.

- Enable and empower “Power Users”.

This was clearly a task for a large language model (LLM), and because the users were already in the Azure ecosystem, a solution built around Azure OpenAI and its LLM offerings made perfect sense.

In this article, the first in a series on Azure OpenAI, we walk through the design patterns, processes, and solutions we evaluated to achieve the goals listed above. Note that all of these solutions share the following prerequisites:

- Review and accept Microsoft’s “Responsible AI terms”.

- Request access to, and create, an Azure OpenAI resource in the Azure tenancy.

- Design and deploy a well-architected Azure Landing Zone to serve as the base of operations.

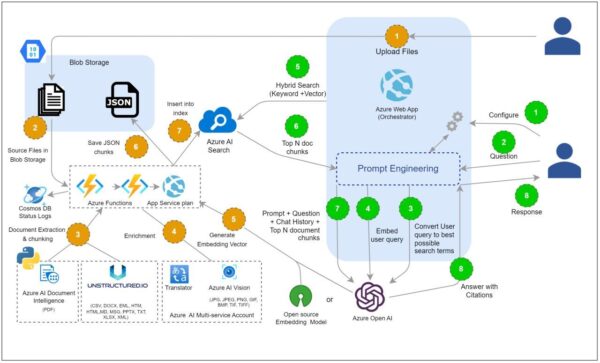

Microsoft Information Assistant

Microsoft’s Information Assistant is a packaged solution designed to accelerate “time-to-value” and showcase Azure OpenAI’s large language models. It uses the Retrieval-Augmented Generation (RAG) pattern, with Azure AI Search for data indexing and retrieval, Azure OpenAI’s GPT-3.5 to generate answers, and a chatbot front end that brings it all together.

As an “all-in-one” solution, Information Assistant was quick and easy to deploy and get started with (provided the prerequisites were met), offered a simple interface for uploading and managing documents, and supported a range of document types. The front-end chat interface, hosted as a web service, did an exceptional job with citations and references.

However, from an engineering perspective, parts of the solution were hidden from view: prompt engineering is built into the code deployment. This meant that changing personas or tweaking the chatbot required redeploying part or all of the solution, which in turn resulted in downtime and less flexibility and customisation. We also noticed that the bot would occasionally hallucinate and return answers that were entirely out of context, another consequence of the prompt engineering being obscured from the user.

Figure 1. Microsoft Information Assistant architecture.
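A common way to avoid this kind of redeployment is to keep the persona outside the code, as data that is read at request time. The sketch below is illustrative only and does not describe how Information Assistant is built: the JSON schema, the persona name and the `load_persona`/`build_messages` helpers are hypothetical. It produces only the role/content message list that chat-completion APIs such as Azure OpenAI's accept; the API call itself is omitted.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    """A chatbot persona kept as data rather than baked into application code."""
    name: str
    system_prompt: str
    temperature: float = 0.0


def load_persona(config_text: str, name: str) -> Persona:
    """Look up a persona by name in a JSON document shaped like
    {"personas": [{"name": ..., "system_prompt": ..., "temperature": ...}]}.
    This schema is hypothetical and only illustrates the idea."""
    config = json.loads(config_text)
    for entry in config["personas"]:
        if entry["name"] == name:
            return Persona(
                name=entry["name"],
                system_prompt=entry["system_prompt"],
                temperature=float(entry.get("temperature", 0.0)),
            )
    raise KeyError(f"unknown persona: {name}")


def build_messages(persona: Persona, question: str) -> list[dict]:
    """Assemble a request in the role/content message format used by
    chat-completion style APIs; the persona supplies the system message."""
    return [
        {"role": "system", "content": persona.system_prompt},
        {"role": "user", "content": question},
    ]


# Hypothetical configuration that a power user could edit (for example in a
# database or blob store) without redeploying the application.
CONFIG = """
{
  "personas": [
    {"name": "policy-assistant",
     "system_prompt": "Answer only from the supplied policy excerpts. If the answer is not in them, say you do not know.",
     "temperature": 0.0}
  ]
}
"""

if __name__ == "__main__":
    persona = load_persona(CONFIG, "policy-assistant")
    for message in build_messages(persona, "Who approves changes to the travel policy?"):
        print(message["role"], "->", message["content"])
```

Because the persona is read on each request, editing the stored configuration changes the bot's behaviour without a redeploy; in practice those edits would also need to be versioned and reviewed.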

Azure Search OpenAI Demo

This solution is more general-purpose and is provided by Microsoft as a quick-start demo. It is much more basic than Information Assistant but shares several of its concepts, such as the RAG pattern, Azure AI Search for indexing and retrieval, an app server, and a user interface. This made it easy to deploy, and its built-in prompt engineering scripts made it slightly better conversationally, but it was not without its own flaws.

As with the previous solution, tweaking the chatbot and its personas through prompt engineering meant redeploying parts of the solution, making the application a black box from a power user’s point of view. It also does not provide a document management system out of the box. However, because it has far fewer components than Information Assistant, changes are much easier and quicker to make and redeploy.

Copilot for Microsoft 365

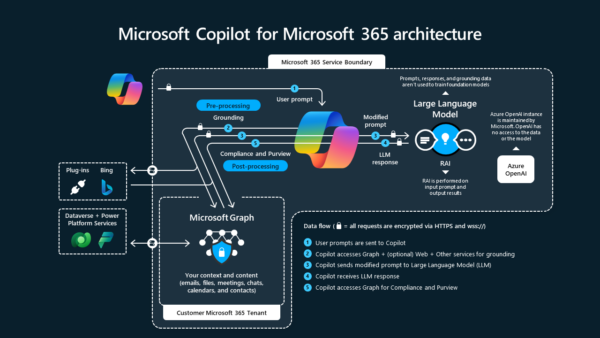

With all these requirements for an AI assistant over an organisation’s documents, it is worth mentioning Copilot for Microsoft 365. Copilot for M365 is available for an additional licensing fee to eligible enterprise, business, and education customers. It is designed as a general-purpose AI solution across the entire M365/O365 suite of applications, including SharePoint. While this would serve the purpose of providing an LLM that can search over your documents, it seemed overkill for the objectives of this exercise. Another major issue is that, because it indexes a wide range of files on SharePoint, quality control becomes a problem: the AI can return results from draft or obsolete versions of documents. Copilot also lacks an interface where power users can customise a chatbot persona to suit a particular business use case. Finally, its steep per-user, per-month fee could make rollout and implementation an expensive exercise.

Figure 2. Copilot for Microsoft 365 architecture.
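Whichever platform does the indexing, one way to keep drafts and obsolete versions out of answers is to curate what gets indexed in the first place. The following is a minimal sketch, assuming each document carries a policy identifier, a version number and an approval status; these fields, the status values and `select_for_indexing` are hypothetical and are not a feature of Copilot or SharePoint.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyDocument:
    # Hypothetical metadata fields; a real document store exposes its own.
    policy_id: str
    version: int
    status: str  # hypothetical values: "draft", "approved", "superseded"
    text: str


def select_for_indexing(docs: list[PolicyDocument]) -> list[PolicyDocument]:
    """Keep only the highest approved version of each policy, so drafts and
    older versions never reach the search index."""
    latest: dict[str, PolicyDocument] = {}
    for doc in docs:
        if doc.status != "approved":
            continue  # skip drafts and anything not formally approved
        current = latest.get(doc.policy_id)
        if current is None or doc.version > current.version:
            latest[doc.policy_id] = doc
    # Sort for a stable, predictable indexing order.
    return sorted(latest.values(), key=lambda d: d.policy_id)


# Illustrative sample data only.
SAMPLE_CORPUS = [
    PolicyDocument("travel", 1, "approved", "Travel policy, version 1"),
    PolicyDocument("travel", 2, "approved", "Travel policy, version 2"),
    PolicyDocument("travel", 3, "draft", "Travel policy, version 3 (draft)"),
    PolicyDocument("leave", 1, "draft", "Leave policy, first draft"),
    PolicyDocument("privacy", 4, "approved", "Privacy policy, version 4"),
]

if __name__ == "__main__":
    for doc in select_for_indexing(SAMPLE_CORPUS):
        print(doc.policy_id, doc.version)
```

With the sample data, only version 2 of the travel policy and version 4 of the privacy policy would be indexed; the draft leave policy is excluded entirely.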

Azure ML Studio & Prompt Flows

Having evaluated all these options, we eventually went back to basics: building a chatbot in Azure ML Studio using Prompt Flows. This too would follow the RAG pattern, use Azure AI Search to crawl and index documents hosted on Azure Data Lake, and use the GPT and text embedding models from Azure OpenAI. As an added benefit, this solution offers a higher level of customisation and direct access to tweak personas through prompt engineering. Because the solution is built from scratch on a platform designed for a low-code approach, power users can build their own custom chatbots quite easily with a little training. To support future requirements, ML Studio also lets you fine-tune existing models for new use cases.
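The RAG pattern common to all of these solutions comes down to retrieving the most relevant chunks for a question and placing them in the prompt. The sketch below shows only those two steps, with deliberate simplifications: a term-frequency `Counter` stands in for an Azure OpenAI text embedding model, an in-memory dictionary stands in for the Azure AI Search index, and the chunk ids, helper names and prompt wording are hypothetical. The final step, sending the prompt to a GPT deployment, is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse term-frequency vector.
    A real deployment would call an Azure OpenAI text embedding model instead."""
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return the k chunks most similar to the question (the retrieval step).
    In the real solution, Azure AI Search would serve this query."""
    q = embed(question)
    ranked = sorted(chunks.items(), key=lambda item: cosine(q, embed(item[1])), reverse=True)
    return ranked[:k]


def build_grounded_prompt(question: str, hits: list[tuple[str, str]]) -> str:
    """Augment the question with numbered sources so the model can cite them."""
    sources = "\n".join(
        f"[{i}] ({doc_id}) {text}" for i, (doc_id, text) in enumerate(hits, start=1)
    )
    return (
        "Answer using only the sources below and cite them as [n]. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


# Hypothetical policy chunks, keyed by an illustrative document#chunk id.
CHUNKS = {
    "travel-policy#3": "International travel must be approved by the divisional director.",
    "leave-policy#1": "Annual leave requests are approved by the employee's line manager.",
    "privacy-policy#2": "Personal information must be stored in approved systems only.",
}

if __name__ == "__main__":
    question = "Who approves international travel?"
    print(build_grounded_prompt(question, retrieve(question, CHUNKS)))
```

Numbering the sources in the prompt is one way to let the model cite them, the behaviour we valued in Information Assistant's front end. Swapping in real embeddings and a search index would change `embed` and `retrieve`, not the overall shape of the pipeline.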

This does come with a downside: a longer time-to-value, given the development, engineering, deployment, and rollout effort involved. However, the adage “measure twice, cut once” holds true here: in the long run, a purpose-built solution on a stable, well-designed platform will save both time and money.

No two organisations’ requirements are identical. To achieve the best results, it is important to be clear on the objectives, who will use the product, and how they want to use it before deciding on a solution. With platforms like Azure OpenAI making AI and LLMs easily accessible, initiatives like these are more of a data engineering and data quality problem than a data science problem, and they must be approached as such.

Empowering Businesses with Azure OpenAI

Integrating Azure OpenAI, as demonstrated in this engagement, represents a strategic shift towards a knowledge-centric business approach. This technology enables more than just advanced document management; it is a gateway to transforming how businesses engage with information. For example, in healthcare it can streamline access to patient records, improving the quality of care, while in construction it can simplify navigating complex regulatory documents.

Stay tuned to learn more about Azure OpenAI, concepts such as RAG and fine-tuning, document indexing, and how they can be applied to your data. Reach out to us today for a chat or an evaluation of your AI needs, and find out how the experts at Adaptiv can help you make the most of your data.