Organisations across finance, healthcare, retail, manufacturing, and beyond depend on a complex ecosystem of legacy applications, cloud services, APIs, and data stores that must coordinate seamlessly. Developers and architects wrestling with these scenarios face an array of challenges: managing state across asynchronous boundaries, orchestrating long-running processes, implementing custom retry and error-handling logic, and scaling without ballooning infrastructure costs.

Azure Durable Functions and Logic Apps combine to address these pain points. Durable Functions let you write stateful workflows in a serverless environment with built-in checkpoints, timers, and automatic restarts. Logic Apps provides a rich, low-code designer and hundreds of connectors for rapid SaaS and on-premises integrations. Together, they form a balanced toolkit for building resilient, maintainable enterprise integration platforms.

Cloud architects, integration specialists, and enterprise developers building or modernising integration platforms regardless of industry will find value here. Whether you’re coordinating microservices, automating complex business workflows, or bridging cloud and on-premises systems, the concepts and patterns described will apply.

In this two-part series, you’ll gain a comprehensive understanding of Azure Durable Functions:

Part 1 (this post) covers:

- Core capabilities of Durable Functions and how they differ from standard Azure Functions

- The fundamental architecture and components that power stateful orchestration

- When to choose Durable Functions versus Logic Apps

- Decision-making criteria for enterprise integration scenarios

Part 2 will dive into:

- Detailed implementation patterns with complete code examples

- Real-world enterprise integration scenarios

- Best practices for production deployments

- Monitoring and troubleshooting strategies

What are Azure Durable Functions?

Azure Durable Functions is an advanced extension of Azure Functions that empowers developers to write stateful workflows within a serverless, stateless compute environment. While traditional Azure Functions are ideal for short-lived, event-driven tasks, they fall short when it comes to orchestrating complex, long-running processes that require persistence, coordination, and reliability.

Durable Functions fill this gap by introducing a robust programming model that supports function chaining, fan-out/fan-in patterns, human interaction, and time-based triggers, all while automatically managing state, checkpoints, and restarts behind the scenes. This abstraction allows developers to focus purely on business logic without worrying about the underlying infrastructure or workflow durability.

In enterprise integration scenarios where systems must communicate reliably across asynchronous boundaries and handle failures gracefully, Durable Functions shine. If the host crashes while a regular Azure Function is executing, any in-memory progress is lost and the work must start again from the beginning, if it is retried at all. Durable Functions instead resume from their last checkpoint, keeping the workflow reliable even in the face of infrastructure failures.

For example, consider a large-scale e-commerce system where a single customer order may trigger a complex workflow that involves validating inventory, processing payment with an external gateway, and coordinating with multiple logistics providers for shipping. Durable Functions provides the reliability to ensure that even if one of these steps fails or takes a long time, the overall workflow state is preserved and can be resumed or retried.

Core Components of Azure Durable Functions

Durable Functions are built on key components that work together to enable stateful orchestration. Understanding these core components is essential, as they form the foundation for the powerful orchestration capabilities that make Durable Functions ideal for enterprise scenarios.

Orchestration Functions

The orchestrator function is the heart of any durable function workflow. It defines the control flow of the application by calling activity functions, handling external events, and managing timers. Orchestrator functions must be deterministic and can replay their execution history when needed.

Key characteristics:

- Define the workflow logic and sequence of operations

- Must be deterministic (produce same result given same input)

- Can call activity functions, wait for events, and create timers

- Automatically checkpoint progress at each await statement

- Can replay execution to recover from failures

Typical responsibilities:

- Coordinate multiple activity functions

- Implement branching logic based on business rules

- Handle timeouts and external events

- Manage long-running processes with built-in state management
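The determinism requirement listed above is easiest to see in code. The following is a minimal sketch rather than part of the order workflow: the function name DeterministicOrchestrator and the SendReminder activity are hypothetical, while CurrentUtcDateTime, NewGuid, and CreateTimer are members of IDurableOrchestrationContext in the in-process C# model (Durable Functions 2.x).

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public static class DeterminismSketch
{
    [FunctionName("DeterministicOrchestrator")] // hypothetical name
    public static async Task<string> Run(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        // Avoid DateTime.UtcNow and Guid.NewGuid() in an orchestrator: they yield
        // a different value on every replay. The context versions are replay-safe.
        DateTime startedAt = context.CurrentUtcDateTime;
        Guid correlationId = context.NewGuid();

        // Avoid Task.Delay and Thread.Sleep: a durable timer is recorded in the
        // orchestration history, so the wait survives a host restart.
        DateTime dueTime = startedAt.AddMinutes(5);
        await context.CreateTimer(dueTime, CancellationToken.None);

        // I/O (HTTP calls, database access, random numbers) belongs in an activity.
        await context.CallActivityAsync("SendReminder", correlationId.ToString()); // hypothetical activity

        return $"Started {startedAt:O}, correlation {correlationId}";
    }
}
```

The design choice is that anything whose value can change between executions either comes from the context or is pushed into an activity, so every replay follows the same path through the code.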

Example: Retail Order Processing Workflow

The orchestrator function below defines the high-level steps required to process a customer order in a Durable Functions workflow. It ensures that each step completes successfully before moving on to the next, with the workflow progressing only if the previous stage is successful.

In the example below:

- The orchestrator starts by calling the ValidateInventory activity function to check whether the product exists in stock.

- If the inventory is insufficient, it returns “Order failed: Insufficient inventory” to the caller and stops further processing.

- If the product is available, it proceeds to the ProcessPayment activity function.

- If the payment fails, it returns “Order failed: Payment declined”.

- If the payment is successful, it continues to the ShipOrder activity function to initiate shipping.

At each activity function call, the current state of the orchestration is persisted by the Durable Task Framework. If the host restarts or a step has to be retried, the orchestrator resumes from the last successful checkpoint rather than starting over. This stateful pattern provides consistency, fault tolerance, and support for long-running workflows.

    [FunctionName("ProcessOrderOrchestrator")]
    public static async Task<string> ProcessOrder(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        var order = context.GetInput<Order>();

        // Validate inventory
        var inventoryResult = await context.CallActivityAsync<bool>(
            "ValidateInventory", order.ProductId);
        if (!inventoryResult)
            return "Order failed: Insufficient inventory";

        // Process payment
        var paymentResult = await context.CallActivityAsync<PaymentResult>(
            "ProcessPayment", order);
        if (!paymentResult.Success)
            return "Order failed: Payment declined";

        // Ship order
        await context.CallActivityAsync("ShipOrder", order);

        return "Order processed successfully";
    }

Activity Functions

Activity functions are the basic unit of work in a durable function application. They perform the actual business logic and can be called by orchestrator functions. Unlike orchestrators, activity functions are not subject to deterministic execution constraints, meaning they can safely perform non-deterministic operations like making API calls or interacting with a database.

Key characteristics:

- Execute the actual work (API calls, database operations, calculations)

- Can perform non-deterministic operations

- Should be designed for idempotency to handle retries safely

- Return results to the orchestrator

- Can be called in sequence or parallel

Typical use cases:

- Calling external APIs or services

- Database operations (read/write)

- File processing and transformations

- Business logic calculations

- Integration with third-party systems
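Idempotency is the characteristic in the list above that is most often overlooked, so here is a small, self-contained sketch of the idea. It is an illustration under stated assumptions, not a Durable Functions API: IdempotentPaymentProcessor is a hypothetical class, and the in-memory dictionary stands in for a durable store such as a database table with a unique key on the order ID.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: records the outcome of each charge by idempotency key.
public class IdempotentPaymentProcessor
{
    // Stand-in for durable storage; a real system would persist this.
    private readonly Dictionary<string, string> _completed = new Dictionary<string, string>();
    private readonly object _gate = new object();

    public int ChargesMade { get; private set; }

    // orderId acts as the idempotency key: a retried call with the same key
    // returns the recorded receipt instead of charging the customer again.
    public string Charge(string orderId, decimal amount)
    {
        lock (_gate)
        {
            if (_completed.TryGetValue(orderId, out var existing))
                return existing;

            ChargesMade++; // the real side effect (calling the gateway) would happen here
            var receipt = $"receipt-{orderId}-{amount}";
            _completed[orderId] = receipt;
            return receipt;
        }
    }
}

public static class IdempotencyDemo
{
    public static void Main()
    {
        var processor = new IdempotentPaymentProcessor();
        var first = processor.Charge("order-42", 19.99m);
        var retry = processor.Charge("order-42", 19.99m); // simulated retry of the same activity

        Console.WriteLine(first == retry);        // True
        Console.WriteLine(processor.ChargesMade); // 1
    }
}
```

An activity written this way can be executed more than once for the same order without producing a second charge, which is what makes retries safe.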

Example: Interacting with External Services

In a real-world scenario, these activities handle the integrations with other systems.

Activity Function 1: ValidateInventory

The ValidateInventory activity function checks if the ordered product is available in inventory. It communicates with an external inventory service to verify the product’s availability. If the inventory is insufficient, the orchestrator function can return an error message or handle the failure appropriately (e.g., Order failed: Insufficient inventory).

Activity Function 2: ProcessPayment

The ProcessPayment activity function handles the processing of the customer’s payment through an external payment service. If the payment fails (i.e., Success = false), the orchestrator can return an error message like Order failed: Payment declined to notify the user.

    [FunctionName("ValidateInventory")]
    public static async Task<bool> ValidateInventory(
        [ActivityTrigger] string productId,
        ILogger log)
    {
        // Call external inventory service
        var inventoryService = new InventoryService();
        return await inventoryService.CheckAvailabilityAsync(productId);
    }

    [FunctionName("ProcessPayment")]
    public static async Task<PaymentResult> ProcessPayment(
        [ActivityTrigger] Order order,
        ILogger log)
    {
        // Call external payment service
        var paymentService = new PaymentService();
        return await paymentService.ProcessAsync(order.PaymentDetails);
    }

Client Functions

Client functions are responsible for starting, managing, and monitoring orchestrator instances in Durable Functions. These functions provide an interface between the external world (e.g., a web application, mobile app, or external system) and the durable workflows.

Key responsibilities:

- Initiate new orchestration instances

- Query orchestration status

- Send external events to running orchestrations

- Terminate or restart orchestrations

- Provide HTTP endpoints for workflow management

Common patterns:

- HTTP-triggered endpoints that start workflows

- Timer-triggered batch processors

- Event Grid or Service Bus triggered initiators

- Management APIs for workflow monitoring
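The responsibilities listed above map onto methods of IDurableOrchestrationClient. The sketch below shows three of them: GetStatusAsync, RaiseEventAsync, and TerminateAsync. The function names, routes, and the "OrderApproved" event name are hypothetical and chosen only for illustration; the sketch assumes the in-process C# model.

```csharp
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;
using Microsoft.Azure.WebJobs.Extensions.Http;

public static class OrderManagementApi
{
    // Query orchestration status
    [FunctionName("GetOrderStatus")] // hypothetical name and route
    public static async Task<IActionResult> GetStatus(
        [HttpTrigger(AuthorizationLevel.Function, "get", Route = "orders/{instanceId}")] HttpRequest req,
        [DurableClient] IDurableOrchestrationClient client,
        string instanceId)
    {
        DurableOrchestrationStatus status = await client.GetStatusAsync(instanceId);
        if (status == null)
            return new NotFoundResult(); // no instance with this ID

        return new OkObjectResult(new
        {
            status.InstanceId,
            RuntimeStatus = status.RuntimeStatus.ToString(),
            status.LastUpdatedTime
        });
    }

    // Send an external event to a running orchestration
    [FunctionName("ApproveOrder")] // hypothetical name and route
    public static async Task<IActionResult> Approve(
        [HttpTrigger(AuthorizationLevel.Function, "post", Route = "orders/{instanceId}/approve")] HttpRequest req,
        [DurableClient] IDurableOrchestrationClient client,
        string instanceId)
    {
        // Received by an orchestrator awaiting WaitForExternalEvent<bool>("OrderApproved")
        await client.RaiseEventAsync(instanceId, "OrderApproved", true);
        return new AcceptedResult();
    }

    // Terminate a running orchestration
    [FunctionName("CancelOrder")] // hypothetical name and route
    public static async Task<IActionResult> Cancel(
        [HttpTrigger(AuthorizationLevel.Function, "post", Route = "orders/{instanceId}/cancel")] HttpRequest req,
        [DurableClient] IDurableOrchestrationClient client,
        string instanceId)
    {
        await client.TerminateAsync(instanceId, "Cancelled by user");
        return new AcceptedResult();
    }
}
```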

Example: HTTP-Triggered API Endpoint

In a retail system, an API endpoint could act as a client function to initiate a new order processing workflow from a website or mobile app. In the example below:

- HTTP Trigger: The client function is triggered by an HTTP POST request. When a customer places an order, the front end (e.g., website or mobile app) calls this endpoint.

- Start Orchestration: The HttpStart function reads the order data from the request body, then uses the IDurableOrchestrationClient (starter) to start a new orchestration by calling StartNewAsync. This schedules the “ProcessOrderOrchestrator” orchestration function with the order data as input and returns the new instance ID.

- Check Status: The CreateCheckStatusResponse method returns an HTTP 202 response containing management URLs, including a status URL that clients can poll to see whether the orchestration is running, completed, or failed. This suits asynchronous workflows, where the client wants to track the order without waiting for it to finish.

    [FunctionName("StartOrderProcessing")]
    public static async Task<IActionResult> HttpStart(
        [HttpTrigger(AuthorizationLevel.Function, "post")] HttpRequest req,
        [DurableClient] IDurableOrchestrationClient starter)
    {
        // ReadFromJsonAsync is the ASP.NET Core extension method for reading a JSON body
        var order = await req.ReadFromJsonAsync<Order>();

        string instanceId = await starter.StartNewAsync("ProcessOrderOrchestrator", order);

        return starter.CreateCheckStatusResponse(req, instanceId);
    }

Sample Response from the CreateCheckStatusResponse for the instanceId:

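    {
      "id": "abc123",
      "statusQueryGetUri": "https://<your-function-app-url>/runtime/webhooks/durabletask/instances/abc123?taskHub=<task-hub>&connection=<connection-name>&code=<system-key>",
      "sendEventPostUri": "https://<your-function-app-url>/runtime/webhooks/durabletask/instances/abc123/raiseEvent/{eventName}?taskHub=<task-hub>&connection=<connection-name>&code=<system-key>",
      "terminatePostUri": "https://<your-function-app-url>/runtime/webhooks/durabletask/instances/abc123/terminate?reason={text}&taskHub=<task-hub>&connection=<connection-name>&code=<system-key>"
    }

Here "id" is the instance ID of the orchestration. The management URIs point at the Durable Functions webhook endpoint rather than at the HTTP-triggered function itself.

Entity Functions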

Entity functions manage small, durable pieces of state in a distributed and consistent manner. They’re ideal for scenarios like maintaining counters, managing aggregates, or tracking workflow states that must persist across function executions.

Key Characteristics:

- Stateful & Durable: Each entity maintains its own durable state that survives restarts.

- Encapsulated: The entity owns its state and updates it through defined operations.

- Event-driven: Entities respond to operations (events) and can be triggered externally.

Use cases:

- Maintaining counters across distributed operations

- Implementing distributed locks

- Managing aggregate state (shopping carts, user sessions)

- Coordinating access to shared resources

Example: Managing Payment Processing State with External API

In this example, let’s assume we are working with an external API (e.g., a payment gateway) and need to manage the state of payment transactions. We will create an entity function that tracks the state of a payment (whether it’s pending, completed, or failed), ensuring consistent state management across multiple API calls.

    [FunctionName("PaymentTransactionEntity")]
    public static void Run(
        [EntityTrigger] IDurableEntityContext context)
    {
        // Get the current state (a new PaymentTransaction if none exists yet)
        var transaction = context.GetState(() => new PaymentTransaction());

        switch (context.OperationName)
        {
            // Operation: Start payment
            case "StartPayment":
                transaction.StartPayment(context.GetInput<PaymentDetails>());
                break;

            // Operation: Update payment status
            case "UpdatePaymentStatus":
                transaction.UpdateStatus(context.GetInput<PaymentStatus>());
                break;

            // Operation: Get current payment status
            case "GetPaymentStatus":
                // The entity function returns void, so results go back via context.Return
                context.Return(transaction);
                break;
        }

        // Save the (possibly updated) transaction state
        context.SetState(transaction);
    }

    public class PaymentTransaction
    {
        public string TransactionId { get; set; }
        public string Status { get; set; } = "Pending"; // Initial state is Pending
        public PaymentDetails PaymentDetails { get; set; }

        public void StartPayment(PaymentDetails details)
        {
            // Simulate starting a payment process (this could call an external payment API)
            Status = "Processing";
            PaymentDetails = details;
            // Assume here you'd call the external payment service.
        }

        // Update payment status based on external API response
        public void UpdateStatus(PaymentStatus status) => Status = status.Status;
    }

This example simulates managing a payment transaction using an entity function in Durable Functions. It could be part of an API-based integration scenario where external API calls are used for payment processing.

How it Works:

- Entity Function Trigger:

- The PaymentTransactionEntity function listens for different operations on the entity, such as StartPayment, UpdatePaymentStatus, and GetPaymentStatus.

- Operations:

- StartPayment: This operation simulates starting the payment process. When the StartPayment operation is called, the payment status is updated to “Processing”, and the details of the payment are saved (e.g., amount, method).

- UpdatePaymentStatus: This operation updates the payment status based on the result from an external payment API (e.g., “Completed”, “Failed”, etc.).

- GetPaymentStatus: This operation returns the current status of the payment transaction (e.g., “Pending”, “Processing”, “Completed”).

- State Management:

- The state of the payment (PaymentTransaction) is persisted across operations using context.SetState(), ensuring the state remains consistent even if the function crashes or the application restarts.

- External API Integration:

- When the StartPayment operation is triggered, it simulates calling an external payment service to process the payment. In a real-world scenario, you’d likely call an API like Stripe or PayPal here.

- The UpdatePaymentStatus operation is where the external API’s response (whether the payment was successful or failed) would be processed and reflected in the entity’s state.
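Orchestrators can talk to the same entity. The sketch below is illustrative: the orchestrator name TrackPaymentOrchestrator is hypothetical, the two small classes are minimal stand-ins for the article's PaymentStatus and PaymentTransaction types, and it assumes the entity hands back its state for the GetPaymentStatus operation through context.Return. SignalEntity and CallEntityAsync are members of IDurableOrchestrationContext in Durable Functions 2.x.

```csharp
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

// Minimal stand-ins for the types used by the entity in this article.
public class PaymentStatus { public string Status { get; set; } }
public class PaymentTransaction { public string Status { get; set; } }

public static class PaymentEntityCaller
{
    [FunctionName("TrackPaymentOrchestrator")] // hypothetical name
    public static async Task<string> Run(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        string transactionId = context.GetInput<string>();

        // An entity is addressed by its function name plus a key.
        var entityId = new EntityId("PaymentTransactionEntity", transactionId);

        // One-way signal: fire and forget, no result is returned.
        context.SignalEntity(entityId, "UpdatePaymentStatus",
            new PaymentStatus { Status = "Completed" });

        // Two-way call: the orchestrator waits for the entity's response.
        PaymentTransaction transaction =
            await context.CallEntityAsync<PaymentTransaction>(entityId, "GetPaymentStatus");

        return transaction.Status;
    }
}
```

Signals suit state updates where the caller does not need an answer; calls suit reads, at the cost of the orchestrator waiting for the entity to respond.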

How this works with the Client Functions:

Imagine you have a client function that triggers this entity function to start the payment process, such as an API endpoint that receives a payment request.

    [FunctionName("StartPaymentTransaction")]
    public static async Task<IActionResult> StartPayment(
        [HttpTrigger(AuthorizationLevel.Function, "post")] HttpRequest req,
        [DurableClient] IDurableEntityClient entityClient)
    {
        var paymentDetails = await req.ReadFromJsonAsync<PaymentDetails>();

        // Entities are addressed by entity name plus key, not by a bare string
        var entityId = new EntityId("PaymentTransactionEntity", paymentDetails.TransactionId);

        await entityClient.SignalEntityAsync(entityId, "StartPayment", paymentDetails);

        return new OkObjectResult(
            $"Payment started. Track status at: /api/GetPaymentStatus?transactionId={entityId.EntityKey}");
    }

    [FunctionName("GetPaymentStatus")]
    public static async Task<IActionResult> GetPaymentStatus(
        [HttpTrigger(AuthorizationLevel.Function, "get")] HttpRequest req,
        [DurableClient] IDurableEntityClient entityClient)
    {
        string transactionId = req.Query["transactionId"];
        var entityId = new EntityId("PaymentTransactionEntity", transactionId);

        var state = await entityClient.ReadEntityStateAsync<PaymentTransaction>(entityId);
        if (!state.EntityExists)
            return new NotFoundResult();

        return new OkObjectResult(state.EntityState);
    }

Explanation of Client Functions:

- StartPaymentTransaction

- The API endpoint receives payment details and triggers the PaymentTransactionEntity by sending the StartPayment operation. The client function sends the payment details to the entity to begin processing.

- A status URL is returned that the client can use to check the payment status.

- GetPaymentStatus:

- Another API endpoint allows the client to check the status of the payment. The client can use the transactionId to query the entity function and retrieve the current state of the payment (whether it’s in progress, completed, or failed).

Key Benefits in this Scenario:

- Distributed State Management: The payment state is stored within the entity, allowing the system to manage the state of each payment transaction independently.

- Durability: If there’s an issue with the system (e.g., the function crashes or the server is restarted), the state of the payment transaction is durable and will persist across failures. This ensures consistency for each transaction.

- Asynchronous Integration: The payment system is likely asynchronous, with external APIs taking time to respond. Using entity functions allows the system to track the progress of the payment and interact with the external API in an event-driven manner.

- Concurrency: Multiple transactions can be processed concurrently, as each transaction is isolated by its entity ID, which means multiple payments can be handled independently without interference.

Task Hubs

Task Hubs are the engine that manages the state of all durable functions. A Task Hub is a logical container for all the underlying storage resources (queues, tables, and blobs) used by a Durable Function application. It persists the state of orchestrations and messages between functions, ensuring that a workflow can survive a host restart and resume from where it left off.

Key characteristics:

- Isolation: Task Hubs provide isolation between different Durable Function applications. This means multiple Durable Functions can share the same underlying storage account but can still maintain their own independent execution contexts.

- Orchestration History and State: Task Hubs store the history and state of orchestrations, including their current status (e.g., whether an orchestration is in progress, completed, or failed) and all events associated with its execution. This state persistence allows Durable Functions to recover gracefully after failures and host restarts.

- Work Queues for Activity Functions: Activity functions are executed as part of orchestrations. Task Hubs manage the work queues for these activity functions, enabling them to execute in the right order based on the orchestration’s control flow.

- Replay and Recovery: Task Hubs provide a mechanism for replay and recovery. When an orchestration is triggered, it begins by replaying the events in order to recreate the state. If any part of the orchestration fails or a host restart occurs, it can recover from the last successful checkpoint, ensuring no loss of data and no need to start from scratch.

- Shared Storage: Several Durable Function applications can use one storage account, provided each is configured with its own Task Hub name. Applications that share both the storage account and the Task Hub name would compete for each other's messages.

How Task Hubs Work:

When a Durable Function orchestrator is triggered, it uses the Task Hub to:

- Store Orchestration State: The orchestrator stores the execution history, progress, and state in the Task Hub’s underlying storage. The history is crucial because it allows the orchestrator to replay its events in case of a failure, thereby resuming from where it left off.

- Enqueue Activity Functions: Task Hubs manage the work queues for activity functions. When an orchestrator function calls an activity function, the task hub places the work in a queue, which is then processed by the activity function workers. This ensures that the work is done in the right sequence.

- Replay Mechanism: If the orchestrator needs to be replayed (due to a failure, timeout, or host restart), the Task Hub ensures that the orchestrator is replayed from the stored history, reconstructing the state of the orchestration before it continues.
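The replay mechanism described above can be modelled in a few lines of plain C#. This is a toy illustration and not the Durable Task Framework: every type in it is hypothetical, activities are plain delegates, and the history is an in-memory list standing in for Task Hub storage. It shows only the core idea that a recorded result is reused on replay instead of running the activity again.

```csharp
using System;
using System.Collections.Generic;

// Toy model of history-based replay. All types here are hypothetical.
public class ToyOrchestrationContext
{
    private readonly List<string> _history; // results persisted by earlier executions
    private int _next;                      // index of the next history entry to consume

    public ToyOrchestrationContext(List<string> history) => _history = history;

    public string CallActivity(Func<string> activity)
    {
        if (_next < _history.Count)
            return _history[_next++];       // replay: reuse the recorded result

        string result = activity();         // first execution: run the activity
        _history.Add(result);               // "checkpoint" the result
        _next++;
        return result;
    }
}

public static class ReplayDemo
{
    public static int InventoryCalls;
    public static int PaymentCalls;

    static string Orchestrate(ToyOrchestrationContext ctx, bool crashBeforeShipping)
    {
        ctx.CallActivity(() => { InventoryCalls++; return "in stock"; });
        ctx.CallActivity(() => { PaymentCalls++; return "paid"; });
        if (crashBeforeShipping)
            throw new InvalidOperationException("host crashed");
        return ctx.CallActivity(() => "shipped");
    }

    public static string Run()
    {
        InventoryCalls = 0;
        PaymentCalls = 0;
        var history = new List<string>();   // survives the "crash", like Task Hub storage

        try { Orchestrate(new ToyOrchestrationContext(history), crashBeforeShipping: true); }
        catch (InvalidOperationException) { /* simulated host failure */ }

        // A new host runs the orchestrator from the top with the same history.
        return Orchestrate(new ToyOrchestrationContext(history), crashBeforeShipping: false);
    }

    public static void Main()
    {
        Console.WriteLine(Run());           // shipped
        Console.WriteLine(InventoryCalls);  // 1
        Console.WriteLine(PaymentCalls);    // 1
    }
}
```

The orchestrator code runs twice, but each activity runs once. This is also why orchestrator code has to be deterministic: the replay must request the same activities in the same order for the recorded results to line up.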

Example of Task Hub in Action:

Let us use a retail order processing scenario to demonstrate how Task Hubs help manage the orchestration of tasks and ensure durability. In this example:

- The orchestrator function processes an order by calling activity functions to validate the inventory, process the payment, and ship the order.

- Task Hubs manage the state of the orchestration, ensuring that each task’s state is stored and the orchestration can resume if there’s a failure.

Example: Order Processing Workflow

Let’s assume the following structure for the orchestrator and activity functions.

Orchestrator Function (OrderProcessingOrchestrator.cs):

    [FunctionName("OrderProcessingOrchestrator")]
    public static async Task<string> ProcessOrder(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        var order = context.GetInput<Order>();

        // Step 1: Validate inventory
        var inventoryAvailable = await context.CallActivityAsync<bool>("ValidateInventory", order.ProductId);
        if (!inventoryAvailable)
        {
            return "Order failed: Insufficient inventory";
        }

        // Step 2: Process payment
        var paymentResult = await context.CallActivityAsync<PaymentResult>("ProcessPayment", order);
        if (!paymentResult.Success)
        {
            return "Order failed: Payment declined";
        }

        // Step 3: Ship order
        await context.CallActivityAsync("ShipOrder", order);

        return "Order processed successfully";
    }

Activity Functions (ValidateInventory, ProcessPayment, ShipOrder):

    [FunctionName("ValidateInventory")]
    public static async Task<bool> ValidateInventory([ActivityTrigger] string productId)
    {
        // Call external inventory service to check if product is available
        var inventoryService = new InventoryService();
        return await inventoryService.CheckAvailabilityAsync(productId);
    }

    [FunctionName("ProcessPayment")]
    public static async Task<PaymentResult> ProcessPayment([ActivityTrigger] Order order)
    {
        // Call external payment API to process payment
        var paymentService = new PaymentService();
        return await paymentService.ProcessAsync(order.PaymentDetails);
    }

    [FunctionName("ShipOrder")]
    public static async Task ShipOrder([ActivityTrigger] Order order)
    {
        // Call external shipping API to ship the order
        var shippingService = new ShippingService();
        await shippingService.ShipOrderAsync(order);
    }

Explanation of Task Hub in This Scenario:

- Task Hub and Orchestration State:

- When the orchestrator function (ProcessOrder) is triggered, it interacts with Task Hub to store the orchestration state. For each activity function call (ValidateInventory, ProcessPayment, ShipOrder), the Task Hub stores the current state and execution history.

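- If the host crashes or restarts partway through, the orchestrator is replayed from the history stored in the Task Hub, and activities that already completed are not executed again. An activity that throws (for example, because the payment service is down) surfaces as an exception in the orchestrator; it is retried only if the orchestrator called it with a retry policy.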

- Activity Queues:

- The Task Hub manages work queues for the activity functions. For example, when the orchestrator calls ValidateInventory, the Task Hub places this task in a queue, and an activity function worker picks it up for execution.

- If a worker fails before finishing a task, the queue message becomes visible again and another worker picks it up. Tasks are therefore executed at least once, which prevents the loss of critical operations and is the reason activities should be idempotent.

- Isolation:

- The Task Hub provides isolation between different Durable Function applications. For example, multiple workflows for different order processing systems (or even different applications within the same organisation) can share the same underlying storage account but still run independently, using their own Task Hubs.

- Replay and Recovery:

- Task Hubs enable replay: if the orchestrator fails, it can replay the orchestration from the stored events in the Task Hub, ensuring that it resumes from the last known good state (e.g., the last successful inventory check) rather than restarting the entire process.

Managing Task Hubs:

In Azure Durable Functions, Task Hubs are defined by the storage account connection and Task Hub name. Each Durable Function application uses a unique Task Hub name, which helps in isolating orchestration data and providing separation of state across different applications or workflows.

Task Hubs can be configured in the host.json configuration file:

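    {
      "version": "2.0",
      "extensions": {
        "durableTask": {
          "hubName": "OrderProcessingHub",
          "storageProvider": {
            "connectionStringName": "AzureFunctionStorage"
          }
        }
      }
    }

Here hubName sets the Task Hub name, and connectionStringName names the app setting that holds the storage connection string.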

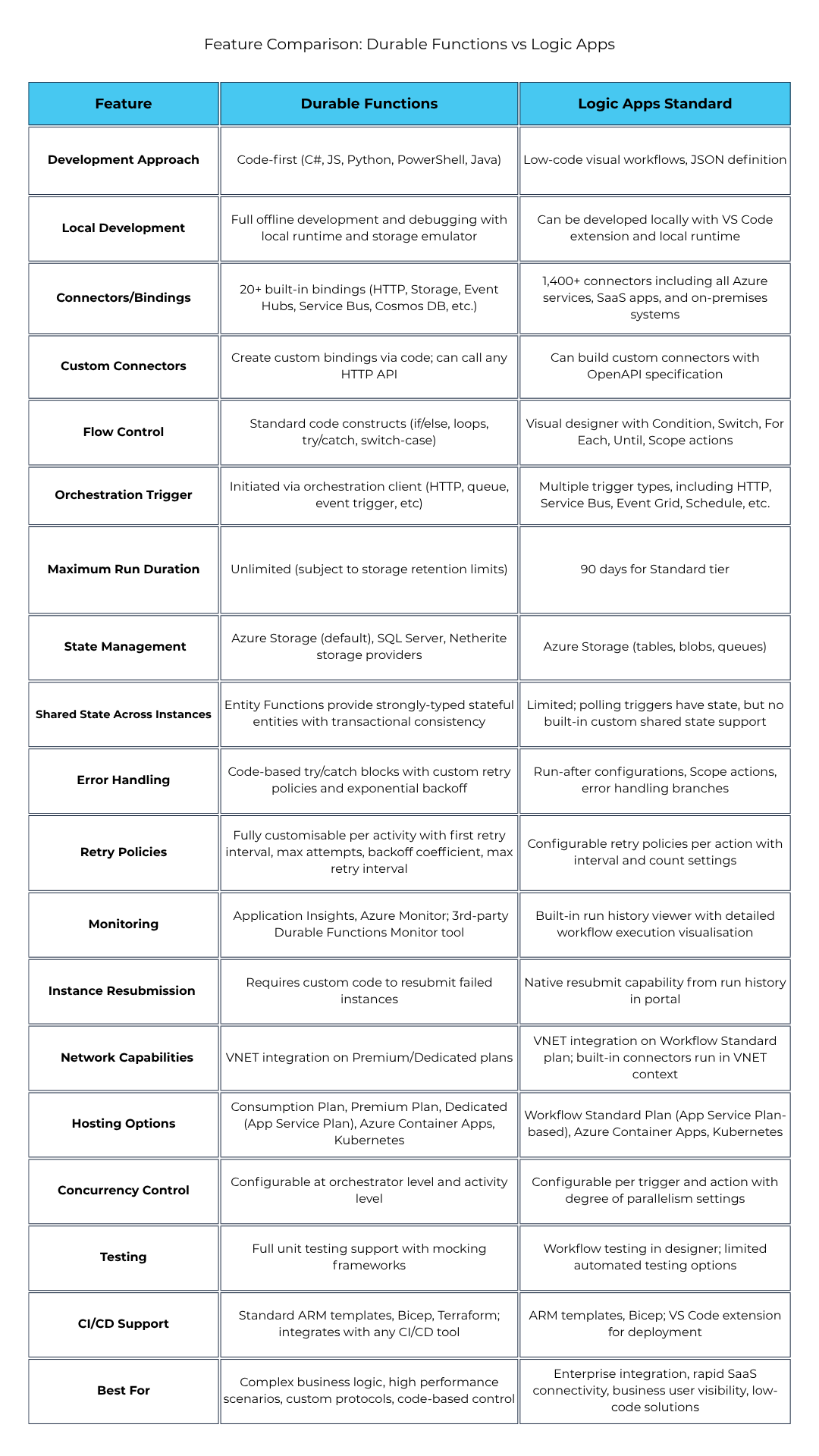

Durable Functions vs Logic Apps Standard: A Practical Comparison

Many teams ask, why choose Durable Functions when Logic Apps Standard exists? Both offer stateful workflows for enterprise integration, but they are designed to solve different classes of problems. Logic Apps offers a great low-code experience and hundreds of connectors, which makes business users happy.

When Logic Apps Becomes Challenging

Logic Apps excels at SaaS integrations, but it can become unwieldy when workflows grow complex. Nested conditions, evolving business rules, and expression language limitations often lead to brittle, hard-to-maintain solutions.

Let me share a story from a recent project. We were building an order processing system that needed to handle complex business rules, custom retry logic, and intricate data transformations. Initially, we went with Logic Apps because, well, it seemed like the obvious choice – enterprise connectors, visual designer, everyone was happy.

Three months later, we were drowning in a sea of nested conditions, trying to debug workflows that looked like spaghetti diagrams, and constantly hitting the expression language limitations. The business rules were evolving rapidly, and what started as simple workflows became unmaintainable monsters.

That’s when we discovered Durable Functions could elegantly solve what Logic Apps made complex.

When Durable Functions Shine

In enterprise applications, business logic is rarely simple, and it’s definitely not static. With Durable Functions, you’re writing actual code.

Consider a pricing algorithm that factors in customer tier, seasonal adjustments, bulk discounts, and regional variations, each governed by several business rules. Expressing that in Logic Apps expressions is difficult to do cleanly, and the resulting workflows are hard to maintain and debug.

With Durable Functions, complex logic becomes straightforward code that’s easy to test, maintain, and evolve with changing requirements.

Advanced Error Handling and Retry Strategies

Logic Apps has retry policies, sure, but what happens when you need different retry strategies for different types of failures? What if a payment gateway failure should be retried immediately, but an inventory check failure should wait 30 seconds, and a third-party API rate limit should back off exponentially?

Durable Functions let the orchestrator attach a different retry policy to each activity call, with granular control over timing, backoff, and exception handling. That level of control is hard to reach in Logic Apps without creating a mess of conditions and parallel branches.

Durable Functions excel in the following scenarios:

- Complex, evolving business logic expressed naturally in code

- Custom retry strategies and sophisticated error handling

- Performance at scale, avoiding per-action costs of Logic Apps

- Testability and debugging with unit tests and IDE breakpoints

- Fine-grained control over execution flow and state management

Example:

The activity function below separates retryable failures from terminal ones. RetryOptions is a class, not an attribute, so it cannot decorate the activity: an exception that escapes the activity is retried only when the orchestrator calls it through CallActivityWithRetryAsync with a RetryOptions policy.

    [FunctionName("ProcessPayment")]
    public static async Task<PaymentResult> ProcessPayment([ActivityTrigger] PaymentRequest request)
    {
        try
        {
            // paymentGateway is the application's gateway client (e.g., a static field)
            return await paymentGateway.ProcessAsync(request);
        }
        catch (RateLimitException)
        {
            // Custom logic for rate limiting: wait, then let the failure propagate
            await Task.Delay(TimeSpan.FromMinutes(1));
            throw; // The orchestrator's retry policy decides whether to try again
        }
        catch (PaymentDeclinedException ex)
        {
            // Don't retry declined payments: return a result instead of throwing
            return new PaymentResult { Success = false, Reason = ex.Message };
        }
    }

Retry policies are set in the orchestrator, per activity call:

Note: The example below shows only part of the orchestrator function.

    // Custom retry policy for specific activities
    var retryOptions = new RetryOptions(
        firstRetryInterval: TimeSpan.FromSeconds(5),
        maxNumberOfAttempts: 10)
    {
        BackoffCoefficient = 2.0,
        MaxRetryInterval = TimeSpan.FromMinutes(5)
    };

    var result = await context.CallActivityWithRetryAsync<PaymentResult>(
        "ProcessPayment", retryOptions, order);
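To make the three failure types mentioned earlier concrete (payment gateway, inventory check, rate-limited third-party API), here is a sketch of one orchestrator using a separate policy for each activity. The orchestrator name, the NotifyPartnerApi activity, and the specific intervals and attempt counts are assumptions for illustration; Order and PaymentResult are minimal stand-ins for the article's types. RetryOptions requires a positive first interval, so "immediately" is approximated with one second.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public class Order { public string ProductId { get; set; } }     // minimal stand-in
public class PaymentResult { public bool Success { get; set; } } // minimal stand-in

public static class RetryPolicySketch
{
    // Payment gateway: retry almost immediately, a few times.
    static readonly RetryOptions PaymentRetry =
        new RetryOptions(TimeSpan.FromSeconds(1), 3) { BackoffCoefficient = 1.0 };

    // Inventory check: fixed 30-second wait between attempts.
    static readonly RetryOptions InventoryRetry =
        new RetryOptions(TimeSpan.FromSeconds(30), 5) { BackoffCoefficient = 1.0 };

    // Rate-limited third-party API: exponential backoff, capped at five minutes.
    static readonly RetryOptions RateLimitedRetry =
        new RetryOptions(TimeSpan.FromSeconds(5), 10)
        {
            BackoffCoefficient = 2.0,
            MaxRetryInterval = TimeSpan.FromMinutes(5)
        };

    [FunctionName("OrderWithRetriesOrchestrator")] // hypothetical name
    public static async Task<string> Run(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        var order = context.GetInput<Order>();

        bool inStock = await context.CallActivityWithRetryAsync<bool>(
            "ValidateInventory", InventoryRetry, order.ProductId);
        if (!inStock)
            return "Order failed: Insufficient inventory";

        var payment = await context.CallActivityWithRetryAsync<PaymentResult>(
            "ProcessPayment", PaymentRetry, order);
        if (!payment.Success)
            return "Order failed: Payment declined";

        try
        {
            await context.CallActivityWithRetryAsync(
                "NotifyPartnerApi", RateLimitedRetry, order); // hypothetical activity
        }
        catch (FunctionFailedException)
        {
            // Raised once every attempt allowed by the policy has failed.
            return "Order processed; partner notification failed";
        }

        return "Order processed successfully";
    }
}
```

Keeping each policy next to the call it governs makes the retry behaviour visible in one place and straightforward to change per activity.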

Where Logic Apps Wins

Rapid SaaS Integration with 400+ Connectors

Need to connect to Salesforce, SharePoint, or any of the 400+ connectors? Logic Apps will have you up and running in minutes. With Durable Functions, you’re writing custom HTTP clients and dealing with authentication flows.

Business-User Visibility and Modification

When business users need to see, understand, or even modify workflows, the visual designer is unbeatable. The graphical representation makes workflows accessible to non-developers.

Simple, Linear Workflows

For straightforward “when this happens, do that” scenarios, Logic Apps is perfect. File uploaded → transform data → send email. Logic Apps excels here with minimal configuration and maximum clarity.

The Sweet Spot for Durable Functions

After working with both extensively, I’ve found Durable Functions hit their sweet spot in these scenarios:

- Complex, Multi-Step Business Processes: Order processing, loan approvals, customer onboarding with multiple decision points

- Long-Running Workflows with Human Interaction: When you need to wait for approvals, external events, or manual interventions

- High-Performance Integration Scenarios: When you’re processing hundreds or thousands of items and need optimal performance

- Custom Integration Logic: When you’re integrating with systems that don’t have Logic Apps connectors or need custom protocols

- Evolving Business Rules: When requirements change frequently and you need the flexibility of code

Making the Decision

Choosing the right tool – Durable Functions, Logic Apps, or a combination of both – depends on specific business requirements, not just personal or technology preference.

Table 1: Feature Comparison – Durable Functions vs Logic Apps

The Hybrid Approach

In practice, many enterprises benefit from using both: Logic Apps for simple, connector-driven workflows, and Durable Functions for complex orchestrations and custom logic. This balanced toolkit lets you build solutions that are both scalable and maintainable by using the right tool for the right job.

What’s Next

Now that we’ve covered the architecture, components, and when to use Durable Functions, next is a deeper dive into the practical implementation details.

In Part 2, we’ll explore:

- Detailed Implementation Patterns: Function Chaining, Fan-Out/Fan-In, Async HTTP APIs, Human Interaction, Saga Pattern, and Event-Driven Integration with complete code examples

- Real-World Enterprise Scenarios: Step-by-step implementations of order processing, parallel validation, approval workflows, and distributed transactions

- Best Practices: Keeping orchestrators deterministic, designing for idempotency, error handling strategies, and monitoring approaches

- Production Considerations: Performance optimisation, cost management, and deployment strategies

The patterns and practices in Part 2 represent proven solutions to real-world integration challenges that will help you build robust enterprise integration platforms.

Continue to Part 2 to see these concepts in action with detailed code examples and implementation patterns.